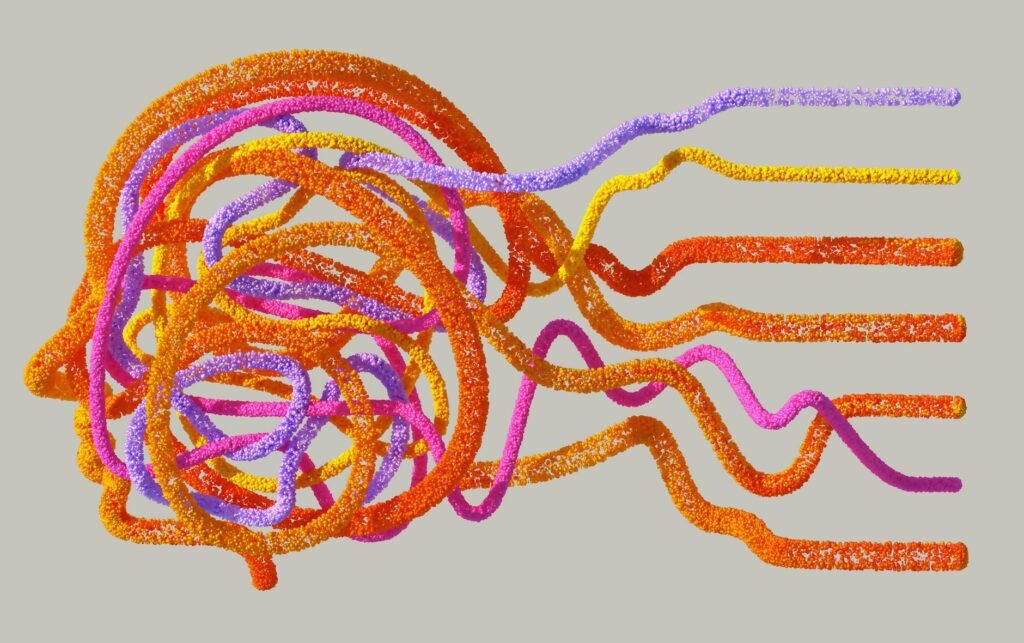

Welcome to the era of artificial intelligence, a term that frequently appears in headlines, fuels innovation, and sometimes even sparks fear. From powering your smartphone’s voice assistant to driving medical breakthroughs, AI is no longer a futuristic concept but a tangible force shaping our present. Yet, for many, the inner workings of AI remain shrouded in mystery, often perceived as an overly complex or even arcane subject. This beginner’s guide aims to pull back the curtain, demystifying artificial intelligence by explaining its fundamental principles, exploring how it learns, and illustrating its widespread applications across various aspects of our lives. Prepare to embark on a journey that will clarify what AI truly is, how it functions, and why understanding it is becoming increasingly essential in our interconnected world.

What exactly is artificial intelligence?

At its core, artificial intelligence (AI) refers to the simulation of human intelligence in machines: systems built to reason, act, and respond in ways that resemble how people do. It is a broad field of computer science dedicated to creating machines that can perform tasks normally requiring human intelligence, such as learning, problem solving, understanding language, making decisions, and perceiving their environment. In traditional programming, a person explicitly codes every rule. Most modern AI systems are instead designed to learn from data, improving their performance as they see more examples rather than relying on constant manual adjustments.

The concept of AI is often categorized into two main types: narrow AI (also known as weak AI) and general AI (or strong AI). Currently, all the AI we interact with falls under the umbrella of narrow AI. This type of AI is designed and trained for a specific task or a narrow set of tasks. Examples include recommendation engines that suggest products you might like, spam filters that identify unwanted emails, or medical diagnostic tools that analyze symptoms. These systems excel at their designated function but lack any broader cognitive abilities or consciousness. General AI, on the other hand, refers to hypothetical machines that would possess human-level cognitive abilities across a wide range of tasks, capable of learning, understanding, and applying intelligence to any intellectual problem, much like a human would. While the pursuit of general AI is a long-term goal for researchers, current technological advancements primarily focus on refining and expanding the capabilities of narrow AI.

How AI learns: The basics of machine learning

The primary method through which modern AI systems learn and improve is a subfield called machine learning (ML). Machine learning algorithms enable computers to learn patterns and make predictions or decisions from data without being explicitly programmed for every specific outcome. Instead of being given a set of fixed rules, an ML model is “trained” on large datasets, which lets it identify correlations, infer relationships, and make data-driven decisions. Training exposes the algorithm to many examples so that it can discern underlying structures and then apply that knowledge to new, unseen data.
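In the simplest case, “training” just means choosing a model’s parameters so that it fits example data. The Python sketch below is a minimal illustration, not how any particular product works. Instead of hard-coding a rule, it fits a straight line y = a·x + b to a handful of examples using ordinary least squares, a classic technique, and then predicts an output for an input it has never seen. The data and the names `fit_line` and `predict` are hypothetical. Real ML models have far more parameters, but they follow the same fit-then-predict pattern.

```python
def fit_line(xs, ys):
    """Learn slope a and intercept b that minimize squared error (ordinary least squares)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x); the best-fit line passes through the means.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    a = cov / var
    b = mean_y - a * mean_x
    return a, b


def predict(model, x):
    """Apply the learned parameters to a new input."""
    a, b = model
    return a * x + b


# Hypothetical training data: hours studied -> exam score.
hours = [1, 2, 3, 4, 5]
scores = [52, 55, 61, 64, 68]

model = fit_line(hours, scores)
print(round(model[0], 2), round(model[1], 2))  # 4.1 47.7
print(round(predict(model, 6), 1))             # 72.3 (an input not in the training data)
```

Nobody wrote the rule “add about 4 points per hour.” The slope and intercept were inferred from the examples.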

There are three primary paradigms of machine learning:

- Supervised learning: This is the most common type, where the algorithm learns from a labeled dataset. This means the input data (features) is paired with the correct output (labels). For example, a system trained to identify cats in images would be fed thousands of images labeled as “cat” or “not cat.” The algorithm learns the mapping from input to output, and once trained, it can predict the label for new, unlabeled images. Applications include image recognition, spam detection, and predictive analytics.

- Unsupervised learning: In contrast, unsupervised learning deals with unlabeled data. The algorithm’s goal is to find hidden patterns, structures, or relationships within the data on its own. It’s like asking the machine to organize a pile of diverse objects without telling it what each object is. Common tasks include clustering (grouping similar data points, e.g., customer segmentation) and dimensionality reduction (simplifying data while retaining important information).

- Reinforcement learning: This approach involves an agent learning to make decisions by performing actions in an environment to maximize a cumulative reward. The agent receives feedback in the form of rewards or penalties for its actions, similar to how a human learns through trial and error. This type of learning is particularly effective for tasks like game playing (e.g., AlphaGo), robotics, and autonomous navigation, where the optimal path or strategy is not immediately obvious.
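The supervised setting (labeled examples in, predictions for new examples out) can be sketched with k-nearest neighbors, one of the simplest supervised algorithms. This is an illustrative toy, not how production image recognizers are built. The two numeric “features” per example and the `knn_predict` helper are hypothetical stand-ins for the features a real system would extract from images. `math.dist` requires Python 3.8 or later.

```python
from collections import Counter
import math


def knn_predict(train, query, k=3):
    """Label `query` by majority vote among its k closest labeled training examples."""
    # Sort the labeled examples by Euclidean distance to the query point.
    by_distance = sorted(train, key=lambda item: math.dist(item[0], query))
    nearest_labels = [label for _, label in by_distance[:k]]
    return Counter(nearest_labels).most_common(1)[0][0]


# Hypothetical labeled dataset: (feature vector, label).
# Pretend the features are (ear pointiness, whisker length) on a 0-10 scale.
training_data = [
    ((8.0, 9.0), "cat"),
    ((7.5, 8.0), "cat"),
    ((9.0, 7.5), "cat"),
    ((2.0, 1.0), "not cat"),
    ((3.0, 2.5), "not cat"),
    ((1.5, 3.0), "not cat"),
]

print(knn_predict(training_data, (8.5, 8.5)))  # cat
print(knn_predict(training_data, (2.5, 2.0)))  # not cat
```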
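The clustering task mentioned under unsupervised learning can be illustrated with k-means, a classic clustering algorithm. No labels are given. The algorithm simply alternates between two steps: it assigns each point to its nearest center, then moves each center to the average of its assigned points. The customer data (visits per month, average spend) and the fixed starting centers are hypothetical. Real implementations usually choose starting centers randomly (or with k-means++) and run several restarts.

```python
import math


def kmeans(points, centers, iterations=10):
    """Group unlabeled points around len(centers) cluster centers (Lloyd's algorithm)."""
    centers = [tuple(c) for c in centers]
    for _ in range(iterations):
        # Assignment step: each point joins the cluster of its nearest center.
        clusters = [[] for _ in centers]
        for p in points:
            nearest = min(range(len(centers)), key=lambda i: math.dist(p, centers[i]))
            clusters[nearest].append(p)
        # Update step: move each center to the mean of its assigned points.
        new_centers = []
        for center, members in zip(centers, clusters):
            if members:
                new_centers.append(tuple(sum(coord) / len(members) for coord in zip(*members)))
            else:
                new_centers.append(center)  # keep an empty cluster's center where it was
        if new_centers == centers:
            break  # converged: the assignments will no longer change
        centers = new_centers
    return centers, clusters


# Hypothetical customers: (visits per month, average spend in dollars).
customers = [(2, 20), (3, 25), (2, 22), (12, 90), (14, 95), (13, 88)]
centers, clusters = kmeans(customers, centers=[customers[0], customers[3]])
print(centers)   # one center per discovered customer segment
print(clusters)  # occasional low spenders vs. frequent high spenders
```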
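The reward-and-penalty loop described under reinforcement learning can be sketched with tabular Q-learning, one standard RL algorithm. Systems like AlphaGo use far more elaborate methods. The environment here is a hypothetical five-cell corridor. The agent starts in cell 0 and can step left or right. It receives +1 for reaching cell 4 and a small -0.01 penalty for every other step. The learning rate, discount factor, exploration rate, and episode count are illustrative choices, not tuned values.

```python
import random

N_STATES = 5                           # corridor cells 0..4; cell 4 is the goal
ACTIONS = (-1, +1)                     # index 0 = step left, index 1 = step right
ALPHA, GAMMA, EPSILON = 0.5, 0.9, 0.2  # learning rate, discount, exploration rate


def step(state, action):
    """Hypothetical environment: move, then return (next_state, reward, done)."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    if next_state == N_STATES - 1:
        return next_state, 1.0, True   # reward for reaching the goal
    return next_state, -0.01, False    # small penalty for every other step


def train(episodes=200, seed=0):
    rng = random.Random(seed)
    # q[s][a] estimates the long-term reward of taking action a in state s.
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: usually take the best-known action, sometimes explore.
            if rng.random() < EPSILON:
                a = rng.randrange(2)
            else:
                a = max(range(2), key=lambda i: q[state][i])
            next_state, reward, done = step(state, ACTIONS[a])
            # Nudge the estimate toward reward + discounted value of the best next action.
            target = reward if done else reward + GAMMA * max(q[next_state])
            q[state][a] += ALPHA * (target - q[state][a])
            state = next_state
    return q


q_table = train()
policy = ["right" if row[1] > row[0] else "left" for row in q_table[:-1]]
print(policy)  # ['right', 'right', 'right', 'right']
```

The agent is never told that “right” is correct. It discovers that strategy from the rewards and penalties it receives through trial and error.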

Within machine learning, deep learning is a powerful subset that uses artificial neural networks with multiple layers (hence “deep”) to learn complex patterns from data. Loosely inspired by the structure of the human brain, deep learning has driven significant advances in areas like natural language processing and image recognition. Much of its strength comes from its ability to automatically extract hierarchical features from raw data, for example edges, then shapes, then whole objects in an image.
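The idea of layered networks can be sketched with a tiny example. This network has two inputs, one hidden layer of sigmoid units, and one output unit. It is trained by backpropagation and gradient descent on XOR, a classic problem that no single-layer linear model can solve. This is a toy under stated assumptions: the hidden-layer size, learning rate, epoch count, and random seed are arbitrary choices. A genuinely “deep” network stacks many more hidden layers of the same kind, and practitioners typically use a library such as PyTorch or JAX rather than hand-written loops.

```python
import math
import random


def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def train_xor(hidden=4, epochs=5000, lr=1.0, seed=1):
    """Train a 2-input network with one hidden layer on XOR using backpropagation."""
    rng = random.Random(seed)
    data = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]
    # Hidden layer: each unit has 2 input weights and a bias.
    w1 = [[rng.uniform(-1, 1), rng.uniform(-1, 1)] for _ in range(hidden)]
    b1 = [0.0] * hidden
    # Output layer: one weight per hidden unit plus a bias.
    w2 = [rng.uniform(-1, 1) for _ in range(hidden)]
    b2 = 0.0
    losses = []

    def forward(x):
        h = [sigmoid(w[0] * x[0] + w[1] * x[1] + b) for w, b in zip(w1, b1)]
        out = sigmoid(sum(wj * hj for wj, hj in zip(w2, h)) + b2)
        return h, out

    for _ in range(epochs):
        # Accumulate gradients over all four examples (full-batch gradient descent).
        gw1 = [[0.0, 0.0] for _ in range(hidden)]
        gb1, gw2, gb2, loss = [0.0] * hidden, [0.0] * hidden, 0.0, 0.0
        for x, y in data:
            h, out = forward(x)
            p = min(max(out, 1e-12), 1 - 1e-12)  # guard against log(0)
            loss -= (y * math.log(p) + (1 - y) * math.log(1 - p)) / len(data)
            # Output error signal for sigmoid + binary cross-entropy.
            d_out = (out - y) / len(data)
            gb2 += d_out
            for j in range(hidden):
                gw2[j] += d_out * h[j]
                # Backpropagate the error through output weight j into hidden unit j.
                d_h = d_out * w2[j] * h[j] * (1 - h[j])
                gb1[j] += d_h
                gw1[j][0] += d_h * x[0]
                gw1[j][1] += d_h * x[1]
        losses.append(loss)
        # Gradient-descent step: move every parameter against its gradient.
        b2 -= lr * gb2
        for j in range(hidden):
            w2[j] -= lr * gw2[j]
            b1[j] -= lr * gb1[j]
            w1[j][0] -= lr * gw1[j][0]
            w1[j][1] -= lr * gw1[j][1]
    return forward, losses


forward, losses = train_xor()
print(f"training loss: {losses[0]:.3f} -> {losses[-1]:.3f}")
for x in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(x, "->", round(forward(x)[1], 2))  # values near 0 or 1 mean XOR was learned
```

The hidden units learn intermediate features (roughly “at least one input is on” and “both inputs are on”) that the output unit then combines. This is a miniature version of the hierarchical feature extraction described above.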

Common applications of AI in our daily lives

AI’s influence stretches far beyond theoretical discussions, permeating countless aspects of our daily lives, often without us even realizing it. From the moment we wake up until we go to sleep, AI-powered systems are at work, making our lives more convenient, more efficient, and sometimes just plain easier. These applications highlight the practical benefits of narrow AI and its versatility across industries and personal technologies.

Consider the following widespread applications:

- Personalized recommendations: Streaming services like Netflix, e-commerce giants like Amazon, and music platforms like Spotify all use AI to analyze your past behavior and preferences, suggesting movies, products, or songs you’re likely to enjoy.

- Voice assistants and natural language processing (NLP): Siri, Alexa, and Google Assistant are prime examples of AI in action. They combine two technologies. Speech recognition converts spoken audio into text, and NLP interprets what that text means. Together these let the assistants understand commands, process information, and respond, enabling hands-free operation of devices and quick access to a vast amount of information.

- Spam filters and fraud detection: Your email inbox is protected by AI algorithms that analyze incoming messages for characteristics indicative of spam or phishing attempts, effectively filtering them out. Similarly, banks use AI to detect unusual transaction patterns that might signify fraudulent activity, safeguarding your finances.

- Autonomous vehicles: Self-driving cars rely heavily on AI to perceive their surroundings through sensors, interpret road conditions, make real-time decisions, and navigate complex environments, aiming to enhance safety and efficiency in transportation.

- Healthcare advancements: AI is revolutionizing medicine, assisting in disease diagnosis (e.g., identifying anomalies in X-rays or MRIs), accelerating drug discovery by analyzing vast molecular datasets, and personalizing treatment plans for patients.
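Flagging “unusual transaction patterns” can be sketched with a very simple anomaly-detection rule. The idea is to learn what a typical amount looks like from a customer’s past transactions, then flag new ones that sit far outside that range. The distance is measured in standard deviations from the mean (a z-score). Production fraud systems combine many signals and usually rely on trained models. The transaction history, the `is_suspicious` helper, and the threshold of 3 are hypothetical choices for illustration.

```python
import statistics


def is_suspicious(history, amount, threshold=3.0):
    """Flag `amount` if it lies more than `threshold` standard deviations from the historical mean."""
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)  # sample standard deviation; needs at least 2 past values
    z_score = (amount - mean) / stdev
    return abs(z_score) > threshold


# Hypothetical past card transactions (in dollars) for one customer.
past_amounts = [42.0, 38.5, 51.0, 45.0, 40.0, 47.5, 39.0, 44.0]

print(is_suspicious(past_amounts, 46.0))   # False: a typical purchase
print(is_suspicious(past_amounts, 900.0))  # True: far outside the usual range
```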

Here’s a brief overview of some key AI applications and their underlying AI types:

| Application Area | Example Use Case | Primary AI Techniques |
|---|---|---|
| Customer Service | Chatbots for instant support | Natural Language Processing, Supervised Learning |
| Finance | Fraud detection in transactions | Supervised Learning, Anomaly Detection |
| Healthcare | Medical image analysis for diagnosis | Deep Learning, Supervised Learning |
| Retail | Personalized product recommendations | Supervised Learning, Collaborative Filtering |
| Transportation | Path planning for autonomous vehicles | Reinforcement Learning, Computer Vision |
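The “Collaborative Filtering” entry in the table refers to recommending items based on what similar users liked. Here is a minimal user-based sketch built on a tiny, hypothetical ratings table; the users, movies, and 1-to-5 scores are invented. First, measure how similar other users’ ratings are to yours using cosine similarity. Then score the movies you have not rated by a similarity-weighted average of their ratings. Real recommendation services use much larger and more sophisticated systems. This only shows the core idea.

```python
import math

# Hypothetical ratings: user -> {movie: rating from 1 to 5}.
ratings = {
    "ana":   {"Inception": 5, "Interstellar": 4, "The Notebook": 1},
    "ben":   {"Inception": 4, "Interstellar": 5, "The Notebook": 2, "Tenet": 5, "Titanic": 1},
    "chloe": {"Inception": 1, "Interstellar": 2, "The Notebook": 5, "Tenet": 2, "Titanic": 5},
}


def cosine_similarity(a, b):
    """Similarity of two users' ratings, using only the movies both have rated."""
    shared = set(a) & set(b)
    if not shared:
        return 0.0
    dot = sum(a[i] * b[i] for i in shared)
    norm_a = math.sqrt(sum(a[i] ** 2 for i in shared))
    norm_b = math.sqrt(sum(b[i] ** 2 for i in shared))
    return dot / (norm_a * norm_b)


def recommend(user, ratings):
    """Score unseen movies by the similarity-weighted average of other users' ratings."""
    scores, weights = {}, {}
    for other, their_ratings in ratings.items():
        if other == user:
            continue
        sim = cosine_similarity(ratings[user], their_ratings)
        if sim <= 0:
            continue  # skip users with nothing in common
        for item, rating in their_ratings.items():
            if item not in ratings[user]:
                scores[item] = scores.get(item, 0.0) + sim * rating
                weights[item] = weights.get(item, 0.0) + sim
    predicted = {item: round(scores[item] / weights[item], 2) for item in scores}
    return sorted(predicted.items(), key=lambda kv: kv[1], reverse=True)


print(recommend("ana", ratings))  # [('Tenet', 3.97), ('Titanic', 2.38)]
```

Ben’s tastes are closer to Ana’s, so his enthusiasm for Tenet counts for more than Chloe’s preference for Titanic. Because every rating here is positive, raw cosine similarity never drops below zero, so even a user with opposite taste still gets some weight. Mean-centering each user’s ratings first (a Pearson-style similarity) is a common refinement.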

Beyond the hype: Understanding AI’s capabilities and limitations

While AI’s advancements are undeniably impressive, it is crucial to approach the technology with a balanced perspective, acknowledging both its transformative capabilities and its inherent limitations. Understanding these boundaries helps manage expectations and fosters a more informed discussion about AI’s role in society.

AI systems excel at tasks that involve processing vast amounts of data, identifying intricate patterns, and performing repetitive actions with speed and accuracy far beyond human capacity. Their capabilities include:

- Efficiency and automation: AI can automate mundane, time-consuming tasks, freeing up human workers for more creative or complex problem-solving.

- Data analysis: It can uncover insights and correlations in massive datasets that would be impossible for humans to find, leading to better decision-making in business, science, and governance.

- Prediction and optimization: AI models can forecast future trends, optimize logistics, and improve resource allocation across various sectors.

- Personalization: By understanding individual preferences, AI can tailor experiences, from content recommendations to customized learning paths.

However, AI is not a panacea, and it comes with significant limitations:

- Lack of true understanding and common sense: Current AI, being narrow AI, does not possess consciousness, empathy, or general common-sense reasoning. It processes data and executes algorithms; it doesn’t “understand” the world in the way humans do.

- Dependency on data quality: AI models are only as good as the data they are trained on. Biased, incomplete, or inaccurate data will lead to biased or flawed AI outputs, perpetuating inequalities or making incorrect decisions.

- Ethical concerns: Issues like privacy infringement (due to data collection), algorithmic bias (leading to unfair outcomes for certain groups), job displacement, and the “black box” problem (where complex deep learning models are hard to interpret) pose significant ethical challenges that require careful consideration and regulation.

- Lack of creativity and intuition: While AI can generate novel combinations of existing data, it lacks genuine human creativity, intuition, and the ability to innovate truly new concepts outside its training domain.
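Here is a toy sketch of why data quality matters so much. A one-feature classifier learns a decision threshold as the midpoint between the average of each class. The same learner is then retrained on a copy of the data in which two labels are wrong. The scores, labels, and the `learn_threshold` and `classify` helpers are hypothetical. The only point is that the learned rule, and therefore its decisions, shift when the data is flawed.

```python
def learn_threshold(examples):
    """Learn a cutoff halfway between the mean feature value of each class (labels 0 and 1)."""
    pos = [x for x, label in examples if label == 1]
    neg = [x for x, label in examples if label == 0]
    return (sum(pos) / len(pos) + sum(neg) / len(neg)) / 2


def classify(threshold, x):
    return 1 if x >= threshold else 0


# Hypothetical data: (practice-test score, 1 = passed the real exam, 0 = failed).
clean = [(30, 0), (40, 0), (45, 0), (60, 1), (70, 1), (80, 1)]
# Same inputs, but two high scorers were mislabeled as failures during data entry.
noisy = [(30, 0), (40, 0), (45, 0), (60, 1), (70, 0), (80, 0)]

t_clean = learn_threshold(clean)
t_noisy = learn_threshold(noisy)
print(round(t_clean, 2), classify(t_clean, 55))  # 54.17 1
print(round(t_noisy, 2), classify(t_noisy, 55))  # 56.5 0
```

The same student with a score of 55 is predicted to pass by the model trained on clean data but to fail by the model trained on flawed data. The algorithm did not change. Only the data did.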

Ultimately, AI is a powerful tool, but it remains a tool. Its effectiveness and ethical impact depend on how humans design, implement, and govern it. It augments human capabilities rather than fully replacing them, pointing toward a future in which human and artificial intelligence collaborate to solve complex global challenges.

We’ve embarked on a journey to demystify artificial intelligence, moving from its abstract definitions to its concrete applications and ethical considerations. We began by establishing what AI truly is—the emulation of human-like intelligence in machines, primarily manifested today as narrow AI. We then delved into the core mechanism of its learning: machine learning, explaining how supervised, unsupervised, and reinforcement learning paradigms enable systems to extract insights from data. Our exploration continued with a look at AI’s ubiquitous presence in daily life, from personalized recommendations and voice assistants to critical applications in healthcare and transportation, highlighting its transformative power across industries. Finally, we balanced the narrative by examining AI’s impressive capabilities alongside its crucial limitations, emphasizing the need for a nuanced understanding that acknowledges both its potential and its ethical challenges.

The conclusion is clear: AI is not a distant, futuristic concept but a rapidly evolving technology that is already reshaping our world. Understanding its fundamentals is no longer just for tech enthusiasts; it’s a foundational literacy for navigating an increasingly automated and data-driven future. As AI continues to advance, fostering critical thinking, promoting ethical development, and encouraging human-AI collaboration will be paramount. By embracing this knowledge, we can collectively work towards leveraging AI’s immense potential for the betterment of society, ensuring its development is aligned with human values and serves to augment our collective intelligence.

Image by: Google DeepMind

https://www.pexels.com/@googledeepmind